WhatsThis


There were two goals for WhatsThis.

The first goal was about functionality:

• Use AI image recognition to analyze a picture taken by the camera.

• Speak the name of what the image shows.

• Keep every operation within the app. A user should not have to exit the app to take a picture.

• Work where there is no internet connection.

The second goal was to show people that AI is not intimidating. AI is often seen that way, even though it is increasingly all around us. So WhatsThis needed to make AI approachable, and even friendly. The UX should be intuitive and easy to use, with an attractive UI. What the app does is something anyone can relate to: take a picture and hear its name. Even the logo looks like a friendly character. In fact, his name is 'Artie'.

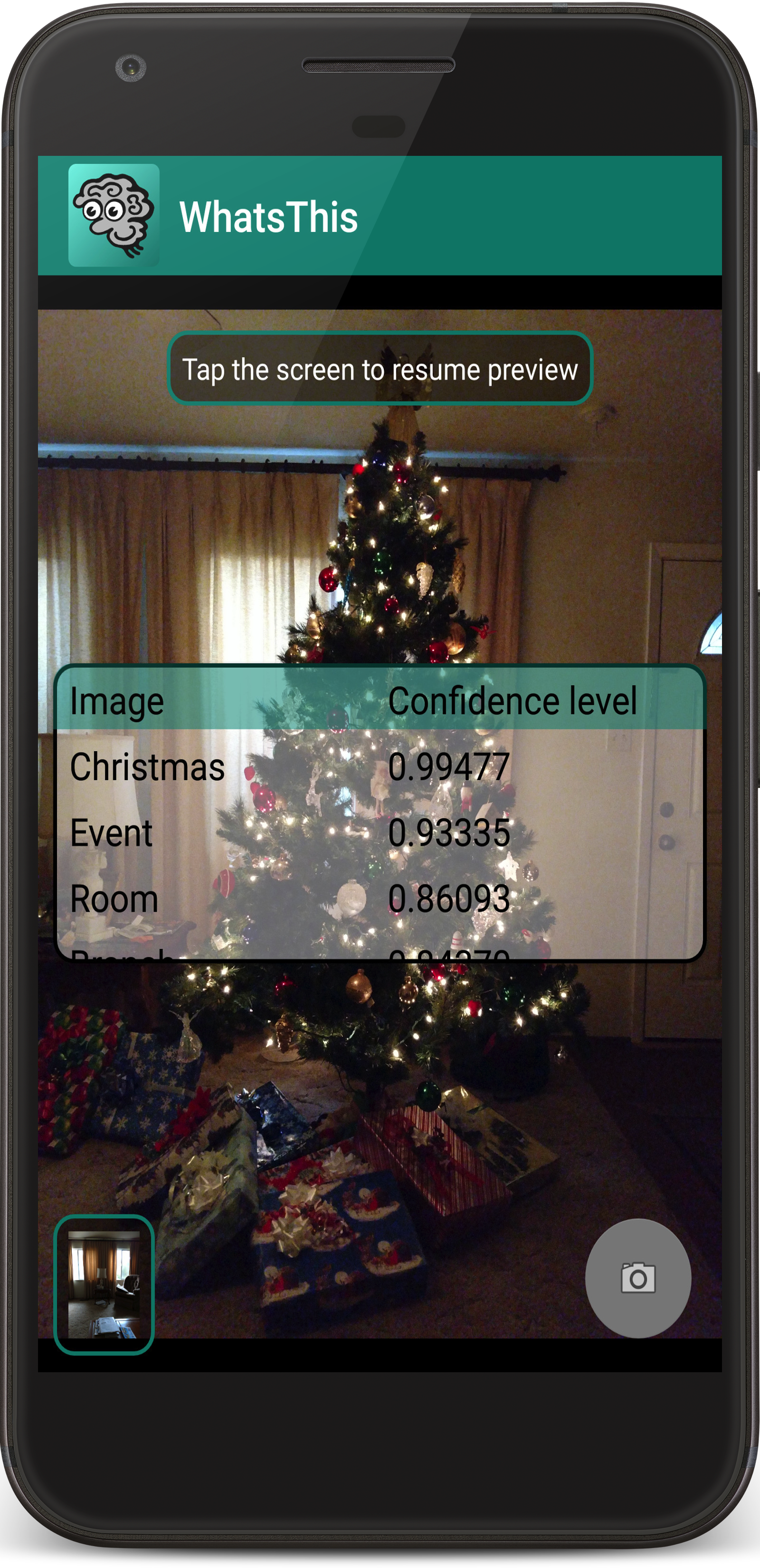

To fulfill these goals, the Firebase Machine Learning APIs are used to return a list of objects recognized in an image. For each object, the ML API also returns a confidence level indicating how certain it is of that recognition. The TextToSpeech APIs are then used to speak the name of the object that has the highest confidence.
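The selection step can be sketched in plain Java. This is only an illustration under assumptions, not the app's actual code: `LabelPicker`, `Label`, and `mostConfident` are hypothetical names, and `Label` stands in for whatever result type the image labeler returns. The sample names and confidence values are made up.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

class LabelPicker {
    /** Hypothetical stand-in for one labeler result: a name plus a confidence in [0, 1]. */
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    /** Returns the label with the highest confidence, or empty if nothing was recognized. */
    static Optional<Label> mostConfident(List<Label> labels) {
        return labels.stream().max(Comparator.comparingDouble(l -> l.confidence));
    }

    public static void main(String[] args) {
        // Made-up sample results for a single picture.
        List<Label> labels = Arrays.asList(
                new Label("Cup", 0.62f),
                new Label("Coffee", 0.91f),
                new Label("Table", 0.48f));

        // In an app, this string would be handed to the text-to-speech engine
        // instead of being printed.
        mostConfident(labels).ifPresent(best -> System.out.println(best.text));
    }
}
```

Returning an `Optional` makes the "nothing recognized" case explicit, so the caller can decide whether to stay silent or prompt the user to try again.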

Because the app must be able to operate where there is no internet connection, ML's on-device image labeling is used rather than the cloud-based image labeling. On-device image labeling also performs well, so the response is fast.

Careful attention was paid to the UX. WhatsThis uses a custom camera so that all functionality stays within the app. The custom camera uses a live video preview for smooth performance. To take a picture, the user presses the camera button, and the captured image is shown as a thumbnail on the screen. To take another picture, the user simply touches the screen after being shown a small prompt.
